[2024-03] Intelligent NPC

Note: To protect commercial confidentiality, public information is limited.

Project Introduction

This is a commercially viable demo showcasing an innovative application of VR-guided virtual docents in museums and exhibitions. The system replaces human guides with AI-driven digital avatars capable of leading visitors and interpreting exhibits, while prioritizing human-like behavior and personality for an immersive experience.

The video below demonstrates the feasibility of digital humans controlled by instruction.

Key highlights include:

- Tool-use-based agent

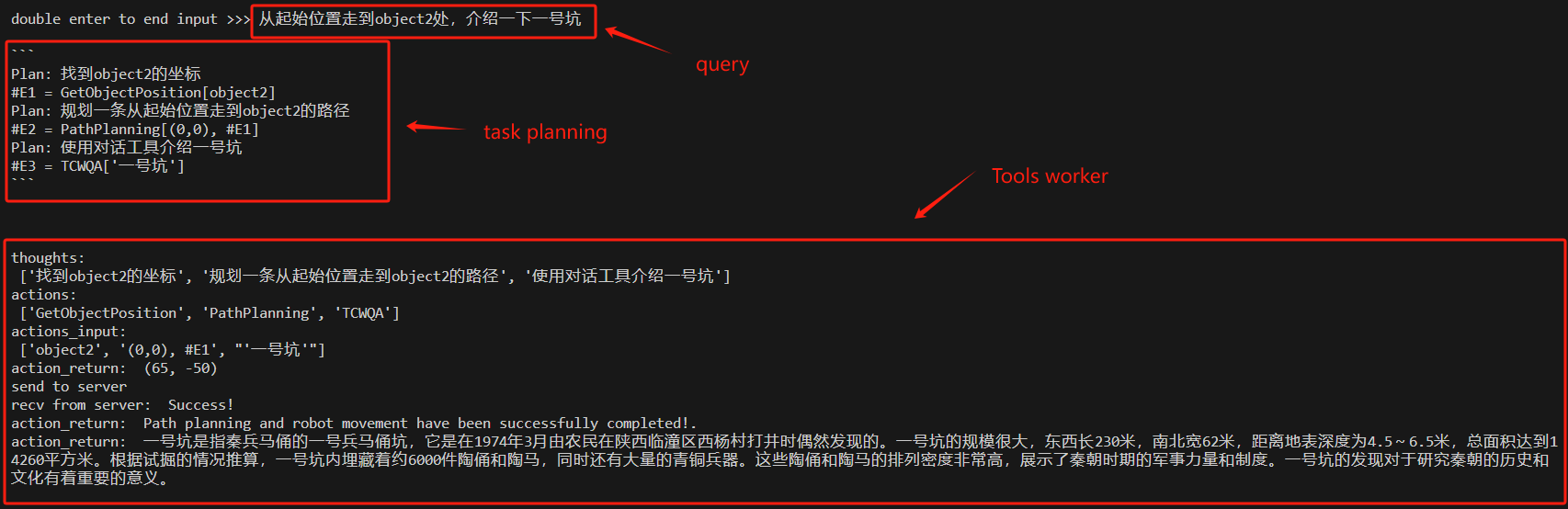

We experimented with ReAct, ReWOO, and LLMs that support function calling, and incorporated task-planning research such as Reflection. Based on this, we designed our own task planning and execution framework (disclosure prohibited), which prioritizes maximizing the instruction completion success rate. The following is a simple ReWOO-based task planning example. It turns out that, until a true world model is available, the accuracy of a single-shot plan is extremely low, while the inefficiency of repeated planning is unacceptable. Therefore, reflection, decoupling of planning and execution, and limited error correction are crucial in task planning.
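
To make the three ideas concrete, here is a minimal Python sketch of a ReWOO-style loop. It is not the project's framework, which is not public. It assumes the planner emits all steps up front with `#E<n>` evidence placeholders, a separate executor resolves them, and each step gets a bounded number of retries with a small reflection hook in between. The names `Step`, `plan`, `reflect`, `execute`, and the stub tools `locate_exhibit` and `describe` are hypothetical, and the plan is hardcoded where a real system would call an LLM.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Step:
    var: str   # evidence variable this step fills, e.g. "#E1"
    tool: str  # name of the tool to call
    arg: str   # argument; may reference earlier evidence such as "#E1"


# Hypothetical stub tools standing in for navigation and RAG QA.
def locate_exhibit(name: str) -> str:
    return f"hall-2/{name}"


def describe(location: str) -> str:
    return f"Notes for {location}"


TOOLS: Dict[str, Callable[[str], str]] = {
    "locate": locate_exhibit,
    "describe": describe,
}


def plan(instruction: str) -> List[Step]:
    """Planning stage: produce the whole plan once, before any tool runs.

    Assumption: a real planner would prompt an LLM; this one is hardcoded.
    """
    return [
        Step("#E1", "locate", instruction),
        Step("#E2", "describe", "#E1"),
    ]


def substitute(arg: str, evidence: Dict[str, str]) -> str:
    """Replace #E<n> placeholders with the results of earlier steps."""
    return re.sub(r"#E\d+", lambda m: evidence[m.group(0)], arg)


def reflect(step: Step, arg: str, error: Exception) -> str:
    """Reflection hook: revise the argument after a failure.

    Assumption: a real system would ask an LLM to critique the failed call;
    this placeholder only trims whitespace.
    """
    return arg.strip()


def execute(
    steps: List[Step],
    tools: Dict[str, Callable[[str], str]],
    max_retries: int = 1,
) -> Dict[str, str]:
    """Execution stage: run the plan without re-planning.

    Each step is retried at most max_retries times (limited error correction).
    """
    evidence: Dict[str, str] = {}
    for step in steps:
        arg = substitute(step.arg, evidence)
        for attempt in range(max_retries + 1):
            try:
                evidence[step.var] = tools[step.tool](arg)
                break
            except Exception as err:
                if attempt == max_retries:
                    raise RuntimeError(
                        f"{step.var} failed after {attempt + 1} attempts"
                    ) from err
                arg = reflect(step, arg, err)
    return evidence
```

Calling `execute(plan("bronze vase"), TOOLS)` returns a dictionary of evidence keyed by `#E1` and `#E2`. Because the retry budget is per step, a single failing tool call costs a bounded number of extra calls rather than a full re-plan.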

- Custom Toolset
  - Dynamically mounted multimodal RAG QA tool
  - Avatar motion generation toolkit
  - Path-planning navigation system
- Enhanced User Experience
  - Safety-grounded responses
  - Real-time latency optimization
  - Personality customization
  - Proactive itinerary planning
  - Real-time ASR & TTS